Understanding the Basics

AI technology is evolving quickly, pairing powerful algorithms with ever more computation. Modern models can handle enormous amounts of data, but developing them involves challenges, such as needing large amounts of data to train the model in the first place.

This is exactly where transfer learning provides an effective solution. It allows a model not to start over each time, but to use what it learnt before to help create new applications or products.

What is Transfer Learning?

Transfer learning is a machine learning method that reuses a pre-trained model as the starting point for a new model, on a task that is similar to, yet different from, the original task.

Example: A person who wants to learn to drive a truck does not need to have driven one before; basic driving skills are enough to start from.

The learner simply builds upon the driving skills learnt before and makes a few adjustments for the new vehicle’s size and mechanics.

In AI, we refer to this “existing knowledge” as a pre-trained model. This model is the result of deep learning on a very large dataset for a particular task, like object detection in images.

Instead of training a new model from scratch, you take a pre-trained model and adjust it so that it performs a different but related task, for example detecting the species of birds in photographs.

The pre-trained model has already learnt how to detect general visual features, like edges, shapes, and textures.

You reuse that learnt feature set as your new base, which means you don’t have to collect and label millions of new data points.

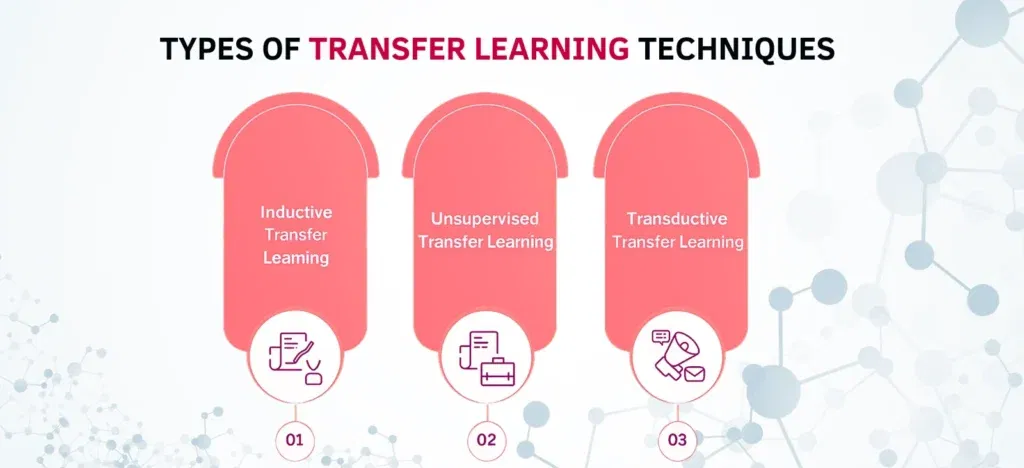

Types of Transfer Learning

While the work of reusing a pre-trained model looks essentially the same in practice, transfer learning is usually divided into a few different types.

As you will see, the types differ in how similar the source and target tasks are, and in how similar the source and target domains are.

Inductive Transfer Learning

This is the most common type, where the source and target tasks are different. The source domain may or may not have labeled data.

Developers typically implement this either by using a pre-trained model as a feature extractor or by fine-tuning some of its layers.
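
The difference between these two strategies comes down to which layers stay trainable. The sketch below is a minimal illustration in plain Python, not a real framework API: the `Layer` class, the layer names, and the parameter counts are all hypothetical, chosen only to make the comparison concrete.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    num_params: int
    trainable: bool = True


def build_pretrained_model():
    # Hypothetical layer names and parameter counts, for illustration only.
    return [
        Layer("conv_block_1", 10_000),
        Layer("conv_block_2", 50_000),
        Layer("conv_block_3", 200_000),
        Layer("classifier_head", 5_000),
    ]


def as_feature_extractor(layers):
    """Freeze every layer except the last one (the task-specific head)."""
    for layer in layers[:-1]:
        layer.trainable = False
    layers[-1].trainable = True
    return layers


def fine_tune_last(layers, n_unfrozen):
    """Freeze all layers, then unfreeze only the last `n_unfrozen` of them."""
    for layer in layers:
        layer.trainable = False
    for layer in layers[len(layers) - n_unfrozen:]:
        layer.trainable = True
    return layers


def trainable_params(layers):
    """Count the parameters that training would actually update."""
    return sum(layer.num_params for layer in layers if layer.trainable)


extractor = as_feature_extractor(build_pretrained_model())
fine_tuned = fine_tune_last(build_pretrained_model(), n_unfrozen=2)

print(trainable_params(extractor))   # 5000
print(trainable_params(fine_tuned))  # 205000
```

In this toy setup, feature extraction trains only the head, while fine-tuning the last two layers also updates part of the pre-trained base, at the cost of more parameters to train.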

Transductive Transfer Learning

In this scenario, the source and target tasks are the same, but the domains are different.

Consider, for instance, a sentiment analysis model that uses product reviews from one website as the source domain and attempts to analyze sentiment on reviews from another website as the target domain. In this case, the source domain reviews are labelled, while the target domain reviews remain unlabelled.

Unsupervised Transfer Learning

This is a more advanced type, and the challenge escalates because there is no labelled data in either the source or the target domain.

The aim is to extract valuable components from the source domain to assist in an unsupervised learning task in the target domain, such as dimensionality reduction or clustering.

How Does Transfer Learning Work?

In practice, transfer learning consists of three key steps:

Selecting a Pre-trained Model

This step starts with the selection of a pre-trained model that has been trained on a large, general dataset. A well-known example is the family of image classification CNN models trained on ImageNet.

ImageNet is a large database of millions of images, and researchers have trained such models on it to recognise many different types of objects.

Freezing the Base Layers

A neural network is composed of several layers.

- The lower layers of a pre-trained model typically learn basic features, e.g., lines and colours.

- The intermediate layers learn more complex patterns (e.g., shapes, textures).

- The final layers learn specific object classification.

In transfer learning, it is common to “freeze” the lower and intermediate layers, which means their weights will not be updated during the subsequent training. This preserves the general understanding that the model has already acquired.
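
What freezing means during training can be shown with a single gradient-descent step. This is a sketch under simplifying assumptions: the parameter groups `lower`, `intermediate`, and `final`, their values, and the gradients are made up for illustration, and deep learning frameworks provide their own mechanisms for excluding layers from updates.

```python
def sgd_step(params, grads, frozen, lr=0.1):
    """Apply one gradient-descent step, skipping parameter groups listed in `frozen`."""
    updated = {}
    for name, weights in params.items():
        if name in frozen:
            # Frozen layers are copied over unchanged.
            updated[name] = list(weights)
        else:
            # Trainable layers move against their gradient.
            updated[name] = [w - lr * g for w, g in zip(weights, grads[name])]
    return updated


# Hypothetical weights and gradients for three groups of layers.
params = {"lower": [0.5, -0.2], "intermediate": [0.1, 0.3], "final": [0.0, 0.0]}
grads = {"lower": [1.0, 1.0], "intermediate": [1.0, 1.0], "final": [1.0, -1.0]}

new_params = sgd_step(params, grads, frozen={"lower", "intermediate"})

print(new_params["lower"])         # unchanged: [0.5, -0.2]
print(new_params["intermediate"])  # unchanged: [0.1, 0.3]
print(new_params["final"])         # updated:   [-0.1, 0.1]
```

Even though gradients exist for every group, only the `final` group changes, which is exactly the behaviour freezing is meant to guarantee.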

Fine-Tuning the Final Layers

The pre-trained model’s final classification layers are replaced with new, untrained layers specific to your task. For example, if a model previously classified 1,000 object categories, its last layer may be changed to classify the five categories of animals your problem is concerned with.

The model is then retrained on the smaller, task-specific dataset.

Since only the final layers undergo training, the process is much faster and requires a smaller dataset.
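
The steps above can be sketched end to end in plain Python. Everything here is an illustrative assumption rather than a real pre-trained network: `frozen_base` stands in for the frozen layers, the 4-dimensional features and the synthetic dataset are invented, and the new head is a simple softmax classifier over the five categories.

```python
import math
import random

random.seed(0)  # make the toy data reproducible

FEATURE_DIM = 4  # hypothetical size of the frozen base's output
NUM_CLASSES = 5  # the five animal categories from the example


def frozen_base(x):
    """Stand-in for the frozen pre-trained layers: fixed, never updated."""
    return [math.tanh(v) for v in x]


def new_head():
    """Fresh, untrained head replacing the original 1,000-class layer."""
    weights = [[0.0] * FEATURE_DIM for _ in range(NUM_CLASSES)]
    biases = [0.0] * NUM_CLASSES
    return weights, biases


def predict_proba(head, features):
    """Softmax over one linear score per class."""
    weights, biases = head
    scores = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(weights, biases)]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mean_loss(head, data):
    """Average cross-entropy of the head on (input, label) pairs."""
    losses = [-math.log(predict_proba(head, frozen_base(x))[y]) for x, y in data]
    return sum(losses) / len(losses)


def train_head(head, data, epochs=50, lr=0.1):
    """Update only the head's weights and biases; the base is untouched."""
    weights, biases = head
    for _ in range(epochs):
        for x, y in data:
            features = frozen_base(x)
            probs = predict_proba(head, features)
            for c in range(NUM_CLASSES):
                error = probs[c] - (1.0 if c == y else 0.0)
                biases[c] -= lr * error
                for j in range(FEATURE_DIM):
                    weights[c][j] -= lr * error * features[j]


def make_dataset(samples_per_class=4):
    """Small synthetic dataset: 20 labelled examples in total."""
    data = []
    for label in range(NUM_CLASSES):
        for _ in range(samples_per_class):
            x = [(2.0 if j == label else -2.0) + random.uniform(-0.3, 0.3)
                 for j in range(FEATURE_DIM)]
            data.append((x, label))
    return data


data = make_dataset()
head = new_head()
loss_before = mean_loss(head, data)
train_head(head, data)
loss_after = mean_loss(head, data)
print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
```

The untrained head starts at the loss of a uniform guess over five classes, and training only its 25 parameters (20 weights and 5 biases) on 20 examples brings the loss down without touching the base.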

Advantages and Disadvantages

While transfer learning has created new opportunities in machine learning, it is important to weigh the benefits against the possible disadvantages.

Advantages:

- Reduced Training Time:

The first advantage is that there is no need to go through the full training process. Developing a neural network from scratch can take a lot of time and effort.

- Less Data Required:

Transfer learning means developers can create models that perform well even if they only have a small dataset for their specific task. Small datasets are common in many industry applications.

- Improved Model Performance:

Since the pre-trained model has already learnt a range of general features, the resulting model will typically be more robust and perform better than one trained from scratch on a small dataset.

- Lower Computational Costs:

Because transfer learning reduces the need for costly high-end compute resources, it enables innovation and development in advanced AI that might otherwise have been blocked by barriers of access.

Disadvantages:

- Negative Transfer:

Transfer learning can result in negative transfer if the source and target tasks are only vaguely related. In that case, it can lower the model’s predictive performance on the new task.

- Choosing the Right Model:

The success of transfer learning depends on choosing, for your new problem, a pre-trained model whose learnt features are relevant to what you want to transfer. This can sometimes be hard to assess.

- Model Complexity:

Although transfer learning reduces the computational cost of training, pre-trained models can be very large and complicated.

This can lead to heavy models that are difficult to deploy in constrained environments.

Transfer Learning Use Cases

The real strength of transfer learning shows in practice, where it has broad and impactful applications across many industries.

Computer Vision

Transfer learning is most common in this field. Practitioners often start from pre-trained models such as VGG, ResNet, and Inception, which have been trained on large image datasets, for tasks such as:

- Medical Imaging: With only a small corpus of labeled medical images, a model trained on general images can be adapted to detect tumors and fractures in scans.

- Object Detection: A model trained on a diverse set of objects can be modified to recognise only a few specific objects in a factory for an automated quality control process.

Natural Language Processing (NLP)

Transfer learning has significantly changed the field of NLP and has made some powerful models possible.

- Sentiment Analysis: By training on large corpora of text, we can develop broad language models, which we can subsequently fine-tune on much smaller labeled datasets to detect the sentiment of customer reviews and social media posts.

- Machine Translation: Models built for many languages can be adjusted to improve translation accuracy for less popular language pairs.

Audio and Speech Recognition

Audio and speech recognition technology has come a long way and is in general use today. Dialects and less popular languages, however, remain a challenge.

They have unique grammatical or phonetic aspects that differ from their parent languages, so speech recognition is easier for popular, widely spoken languages than for less popular dialects or languages. Transfer learning helps here: a model pre-trained on a widely spoken language can be fine-tuned on a small amount of audio from the dialect.

Conclusion

Transfer learning is a potent technique that helps developers create robust AI applications.

By leveraging pre-trained models, it is possible to build them with minimal data and computational demands. This approach not only accelerates development but also makes AI technology more widely available. Understanding transfer learning paves the way towards further innovations in artificial intelligence.
